The introduction of new immunosuppressant drugs in recent years has reduced acute rejection rates and markedly improved short-term kidney transplantation results. Nonetheless, this improvement has not translated into comparable changes in long-term results, and late graft failure continues to be a frequent cause of return to dialysis programmes and re-entry onto the waiting list. Multiple insults of immunological and non-immunological origin act together and lead to chronic allograft dysfunction. The characteristics of the transplanted organ are a major determinant of graft survival and, although various algorithms have been designed to estimate the risk of the organ to be transplanted and to assign it to the most suitable recipient, they are still applied in the clinical setting only exceptionally. Characterising, for each patient, the immune factors (clinical and subclinical rejection, reactivation of latent viral infections, adherence to treatment) and non-immune factors (hypertension, diabetes, anaemia, dyslipidaemia) that contribute to chronic allograft dysfunction could allow us to intervene effectively to delay the progression of this process. Therefore, identifying the causes of graft failure and its risk factors, applying predictive models, and intervening in causal factors could be strategies for improving kidney transplantation results in terms of survival. This review analyses some of the evidence on graft failure, as well as related therapeutic and prognostic aspects: 1) magnitude of the problem and causes of graft failure; 2) identification of graft failure risk factors; 3) therapeutic strategies for reducing graft failure; and 4) graft failure prediction.

INTRODUCTION

Kidney transplantation (TX) constitutes the treatment of choice for patients with end-stage renal disease (ESRD), since it is associated with better patient survival, improved quality of life, and lower economic cost than renal replacement therapy with dialysis.1 In the last few decades, this treatment has become progressively more accessible to a greater number of patients, such that in Spain approximately half of all patients with ESRD have a functioning TX.2 Current registries from several countries have confirmed the progressive improvement in short-term TX results. Currently, the incidence of acute rejection is <15%, and graft survival at one year is >90%. In contrast, long-term results have been difficult to interpret, since contradictory data have been reported.3, 4 The rate of graft loss after the first year post-transplant lies between 3% and 6% per year, and return to dialysis following graft failure is one of the most common causes of starting dialysis programmes and re-entering the organ wait list.5

In recent years, the demographic and comorbidity characteristics of TX donors and recipients have changed in ways that have undoubtedly influenced these results.6 In addition, new immunosuppressant drugs have been introduced that have reduced the rate of acute rejection episodes. Patients with TX have also increasingly been prescribed medications with potentially protective effects for the heart and kidneys (anti-hypertensives, statins, and anti-platelets), which could modify progression towards renal failure.

In order to evaluate late graft failure, we need adequate information regarding the causes of graft failure, which requires histological analyses of the allograft, once vascular and urinary tract issues are ruled out. The international classification system proposed by the Banff group7 has been modified several times since its introduction in 1991 to incorporate newly acquired knowledge.8-11 During the 1990s, chronic transplant nephropathy (CTN) became the primary cause of graft failure,12, 13 but the low specificity of this diagnosis has limited the analysis of causes of graft failure. In addition, during recent years, more sensitive techniques have become available for detecting HLA antibodies, which have contributed to characterising the role of antibody-mediated rejection.14

As such, extending graft survival following TX has become a clinical priority. With this in mind, an understanding of the causes of graft failure, identification of the risk factors that influence this entity, application of predictive models, and interventions for treating its causal factors could be beneficial strategies for optimising TX results.

In our review, we consider the aetiopathogenic evidence available for graft failure, and the therapeutic and prognostic aspects of this phenomenon under the following sub-headings of clinical relevance: 1) magnitude of the issue and causes of graft failure; 2) identification of risk factors; 3) therapeutic strategies for minimising graft failure and 4) predicting graft survival.

1. MAGNITUDE OF THE ISSUE AND CAUSES OF GRAFT FAILURE

Currently, no Spanish TX registry provides precise information regarding the rates and causes of graft loss. As such, we draw our information from international registries, regional (autonomous community) registries, and one Spanish study,6, 15 which evaluated cohorts of patients receiving transplants in 1990, 1994, 1998 and 2002, a total of almost 5000 individuals who reached one year post-transplant with a functioning graft (Spanish Group for the Study of Chronic Transplant Nephropathy [GEENCT]).16 Until recently, live-donor transplantation was a rarity in Spain, and so national long-term results for it are not available.

New immunosuppressant agents were introduced into clinical practice during the late 1990s and start of the new millennium, accompanied by decreases in acute rejection rates from 40%-50% to 10%-15%. In addition, graft failure due to acute rejection has become quite an uncommon cause of graft loss in patients with a low immunological risk (1%-2%).17, 18 Currently, in the first year following TX, the primary cause of graft failure is related to complications of the surgical procedure, especially in the form of vascular thrombosis (2%-5%).17, 18 The use of non-heart beating donors and expanded criteria donors has been associated with a significant percentage of cases of primary graft failure, which can reach 20% in cases of non-heart beating donations.19

In order to evaluate the impact of new immunosuppression regimens on transplant results after 1 year, we must compare cohorts of patients who received transplants during each era of immunosuppression therapy. Data from an Australian registry,20 which compared cohorts of patients who received transplants between 1993 and 2004, demonstrated a decrease in acute rejection rates from 40% to 23%, accompanied by an improvement in the survival of grafts from cadaveric donors at 1 year (85% vs 90.2%) and at 5 years (69.9% vs 76.7%). The results for European patients provided by the Collaborative Transplant Study (CTS)21 suggest a very significant increase in graft half-life (12.5 years for 1988-1990 vs 21.8 years for 2003-2005). In contrast, the improvements in long-term transplant results in the United States have been more modest, with graft half-life increasing from 6.6 years in 1989 to 8.8 years in 2005 for cadaveric donations. At any rate, the data from this registry reveal a decrease in annual graft loss during the first 10 years post-transplantation, from 6%-8% in 1989 to 4%-7% in 2005. In addition, if we exclude patient death with a functioning graft from the analysis, the rate of annual graft loss improves from 4%-6% to 2%-4% for standard donors.22

In Spain, data from the Catalonian registry revealed that short-term and mid-term results improved greatly between 1984-1989 and 2002-2009.5 Survival at one year increased from 78.1% to 89.4%, while 5-year survival increased from 58.1% to 76.7%. This indicates that the annual rate of graft loss between the 2nd and 5th years fell from 4% to 2.5%. In contrast, the GEENCT15 results demonstrate that the decrease in acute rejection rates from 46% to 15.8% between 1990 and 2002 was accompanied by a non-significant increase in graft half-life, after adjusting for patient death (15 years vs 19 years). Finally, a single-hospital study in Spain involving more than 1400 cases demonstrated that mean graft survival increased significantly by almost 1 year between 1985-1995 and 1996-2005.23

Graft survival results must always be evaluated in the context of demographic changes in the donor and recipient populations. In order to overcome this limitation, the GEENCT performed a case-control study matching patients from all 4 cohorts on 6 donor and recipient variables.24 This study showed that the decrease in acute rejection rates was associated with a significant improvement in long-term results.

Finally, a substantial portion of the differences reported between countries appears to be associated with the methodologies employed. A comparative study between GEENCT patients and patients from the American registry, which applied the same methodology to both groups and adjusted for confounding factors and patient death, demonstrated a similar 10-year graft survival rate in the two countries (75.6% vs 76%).25

The evaluation of causes of late graft failure has changed significantly in recent years. The definition of CTN established by the Banff group in 1991 led to the dominance of this non-specific entity as the leading cause of late graft failure for many years.13 Interstitial fibrosis and tubular atrophy (IF/TA), which defines CTN, is a very common finding in analyses of surveillance biopsies, and is found in more than 60% of all grafts 1 year after transplantation.12, 26 Several studies have confirmed that isolated IF/TA in stable grafts is not associated with a worse prognosis for the transplant,27, 28 and that other types of lesions that might contribute to chronic dysfunction must be identified. Figure 1 suggests different patterns that could explain the appearance of chronic kidney allograft dysfunction. During the first few months following transplantation, we observe a loss in renal function associated with ischaemia/reperfusion damage and episodes of cell-mediated or antibody-mediated immunological injury. Afterwards, many grafts maintain stable renal function for several years, with progressive decreases in renal function only if some triggering event occurs (black line in Figure 1). Recent studies suggest that this phenomenon is often observed in association with the appearance of de novo donor-specific antibodies (DSA) in the context of inadequate immunosuppression.29, 30 The histological pattern observed is that of chronic humoral rejection,10 characterised by microcirculation inflammation in the graft and transplant glomerulopathy (with or without C4d deposits). Non-adherence to immunosuppressant treatments and the use of minimal immunosuppression therapy (due to associated pathologies or other reasons) appear to be the primary causes of this phenomenon. In addition, insufficient treatment may be associated with episodes of late acute rejection (cellular, humoral, or mixed) that respond poorly to treatment. Less commonly, other mechanisms may be associated with this phenotype (recurrent or de novo glomerulonephritis or severe concomitant diseases).

A second phenotype of chronic allograft dysfunction arises when the damage produced during the first few months after transplantation leads to a slow, progressive loss in renal function over the following years (dark grey line in Figure 1). Analyses of surveillance biopsies show that persistent acute cellular rejection may affect 5%-10% of all grafts, and could contribute to a progressive loss of functioning nephrons, with progressive IF/TA and glomerulosclerosis.31 In addition, studies of surveillance biopsies taken from patients with pre-formed DSA have revealed a very high percentage of cases involving persistent microcirculation inflammation associated with the appearance of antibody-mediated rejection32 and accelerated graft arteriosclerosis.33 Apart from persistent immunological damage (mediated by T-cells and/or antibodies), non-immunological lesions (recurrent or de novo kidney diseases, repeated bacterial and viral infections, and obstructive uropathy) could contribute to the appearance of this second phenotype. For many years, debate has persisted regarding the role of calcineurin inhibitor nephrotoxicity in the development of chronic allograft dysfunction, since some of the lesions associated with this type of treatment can also be associated with insufficient immunosuppression therapy.34-38 Although there is a general consensus that nephrotoxicity contributes to the progression of chronic kidney disease (CKD), recent studies suggest that this lesion alone is only rarely the cause of chronic allograft dysfunction.17, 30 Finally, the characteristics of the allograft can also affect prognosis very significantly.39, 40 Recipients of organs from expanded-criteria donors (light grey line in Figure 1) reach significantly lower levels of renal function during the first few months following transplantation. In this context, mechanisms of accelerated senescence42 and/or hyperfiltration41 can mediate the progression of renal failure without any new event directly participating in the development of new lesions to the allograft.

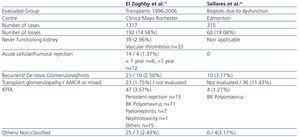

Table 1 summarises two recent studies that have reviewed the contribution of the aforementioned causes to graft failure. The study design and terminology employed in each of these are responsible for a large portion of the differences observed. Finally, it is possible that the combination of several factors is fundamentally responsible for chronic allograft dysfunction.

2. IDENTIFICATION OF RISK FACTORS FOR GRAFT FAILURE

The analysis of risk factors for short and long-term graft failure has recently been reviewed.43,44 In order to carry out an adequate analysis, we must separate the variables present at the moment of TX from those that appear afterwards, since the latter often reflect the result of an interaction between the clinical and immunological characteristics of the donor and recipient. Table 2 displays the pre- and post-transplant variables that are related to kidney graft survival, including immunological and non-immunological factors. The demographic characteristics of both donors and recipients and their associated comorbidities stand out among the non-immunological factors. Pre-transplant immunological factors are particularly important in determining the evolution of the kidney graft. Pre-transplant sensitisation due to transfusions, pregnancy, or previous transplantations is consistently associated with decreased graft survival. In the era of modern immunosuppression, the impact of HLA compatibility on graft survival is lower, but in registry studies with large numbers of patients, the degree of HLA matching continues to be correlated with graft survival.45

The impact of post-transplant variables on graft survival varies between studies. The various comorbidities that can affect transplant recipients (arterial hypertension, diabetes, viral infections, and recurrence of underlying disease) are associated with variables inherent to the patient (such as age and obesity), but are also related to the type of immunosuppression received. Maintenance regimens with tacrolimus, mycophenolate mofetil, and corticosteroids are associated with a higher prevalence of diabetes, arterial hypertension, and viral infections, whereas regimens based on mTOR (mammalian target of rapamycin) inhibitors are associated with a higher prevalence of dyslipidaemia, but a lower prevalence of arterial hypertension and viral infections. Several clinical trials and meta-analyses have examined the risks and benefits of withdrawing corticosteroids,46 but we must not forget that some types of glomerulonephritis can recur more frequently after withdrawal,47 and the evolution of DSA under this strategy remains unclear.

Acute rejection can have a major negative impact on patient outcome. Recent studies have shown that episodes of acute cellular rejection that respond to corticosteroid therapy with complete recovery of renal function have no impact on survival. However, episodes of severe acute rejection, rejection with a vascular component, and acute antibody-mediated rejection are associated with decreased graft survival.48 In addition, episodes of late acute rejection are associated with insufficient immunosuppression therapy, and are characterised by a poor response to treatment.48 Other studies with surveillance biopsies have shown that sub-clinical episodes of cellular or antibody-mediated rejection are associated with a worse prognosis for graft survival.31-33 Nevertheless, in the modern era of immunosuppression, the prevalence of sub-clinical rejection is very low (less than 5%) in patients with low immunological risk.49, 50 Finally, non-adherence to immunosuppressant regimens has been associated both with episodes of late acute rejection and with DSA and chronic humoral rejection.30 Currently, the only available tool for evaluating adherence to treatment is monitoring blood levels of immunosuppressant drugs, although several studies have demonstrated that non-adherence is correlated with several recipient-related variables (adolescence, low socio-economic status, etc.).

Acute post-TX tubular necrosis is associated both with a greater risk of acute rejection and with a greater risk for chronic dysfunction, regardless of the presence of rejection.51 Renal function parameters at 3-6 months post-TX, the presence of proteinuria (even at low levels of 0.15-1g/day), and progression of renal function/proteinuria from months 3 to 12 are also associated with late graft failure.15, 52 Other variables that have been less commonly used to monitor graft health, such as resistive index obtained by Doppler ultrasound53,54 and findings from surveillance biopsies,26 have also been associated with graft failure.

3. THERAPEUTIC STRATEGIES FOR MINIMISING GRAFT FAILURE

Therapeutic strategies for reducing the rate of graft loss must act upon the aforementioned risk factors. Obviously, we cannot control the demographic variables and comorbidities of recipients and donors, but these variables must be taken into account when assigning a recipient to each donated organ in order to optimise transplant results. One of the simplest proposals is the use of organs from elderly donors for elderly recipients,55, 56 although some studies have shown that the benefits obtained by this method of assignment are small, and that it limits access to transplants for younger recipients.57-59 It has also been proposed that organs obtained from expanded-criteria donors be used for non-sensitised recipients older than 60 years (or older than 40 years for diabetics), or for recipients with severe vascular access problems.60 Alternatively, various algorithms have been developed with the goal of optimising transplant results by taking into account the principles of equity (equal opportunity for all recipients on the wait list), efficiency (minimising the rate of graft loss due to the death of patients with a functioning graft), and utility (maximising the number of patients with a functioning graft in order to minimise the rate of re-entry onto the wait list). The algorithms required for these systems of organ assignment are complex and require the collaboration of statisticians and mathematicians who take into account the characteristics of the population and the impact that these variables have on graft survival. Figure 2 displays the algorithm proposed by Baskin-Bey and Nyberg,61, 62 which takes into account risk scores for the donor and recipient. Because the number of donors and recipients at each risk level is not homogeneous, the number of patients in each recipient risk range must be adjusted to the availability of organs based on donor score.
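The idea of adjusting recipient risk ranges to organ availability can be sketched in a few lines of Python. This is a purely illustrative sketch and not the published Baskin-Bey and Nyberg algorithm: the function name `size_recipient_bands`, the grade labels A to C, and all scores and counts are hypothetical. It simply sizes each recipient band so that it holds the same share of the wait list as the matching donor grade holds of the organ pool.

```python
def size_recipient_bands(recipient_scores, donor_grade_counts):
    """Split recipients, ordered by risk score, into bands whose sizes
    mirror the share of organs available in each donor grade.

    Hypothetical illustration: grade labels and scores are made up.
    donor_grade_counts must list grades from lowest to highest donor risk.
    """
    ordered = sorted(recipient_scores)
    total_organs = sum(donor_grade_counts.values())
    grades = list(donor_grade_counts)
    bands = {}
    start = 0
    cumulative = 0
    for index, grade in enumerate(grades):
        cumulative += donor_grade_counts[grade]
        if index == len(grades) - 1:
            # The last band takes every remaining recipient.
            end = len(ordered)
        else:
            # Cut-off position proportional to cumulative organ supply.
            end = round(len(ordered) * cumulative / total_organs)
        bands[grade] = ordered[start:end]
        start = end
    return bands


# Hypothetical wait list of 10 recipient risk scores and 10 available organs.
recipient_scores = [2, 9, 4, 7, 1, 5, 8, 3, 10, 6]
donor_grade_counts = {"A": 3, "B": 5, "C": 2}
bands = size_recipient_bands(recipient_scores, donor_grade_counts)
for grade, members in bands.items():
    print(grade, members)
```

With these made-up inputs, the three lowest-risk recipients fall in the band matched to grade A organs, the next five in the band for grade B, and the last two in the band for grade C. A real allocation system would also have to weigh equity, waiting time, and immunological compatibility, which this sketch ignores.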

Ischaemia-reperfusion damage is one of the limiting factors for renal function in the immediate post-transplant period, and can lead to acute tubular necrosis. The two most useful strategies for attenuating this damage, especially in high-risk grafts (expanded-criteria donors and non-heart beating donors), are to minimise cold ischaemic time63 and to maintain the graft on a pulsatile perfusion machine rather than in cold storage.64

The immunosuppression regimen used can play a key role in determining graft survival. Currently, the majority of centres use a combination of a calcineurin inhibitor (tacrolimus or cyclosporine), an anti-proliferative agent (mycophenolate mofetil or azathioprine), and corticosteroids. In clinical trials and meta-analyses, treatment with tacrolimus has been associated with a lower rate of clinical and sub-clinical acute rejection, better renal function, and improved graft survival compared with cyclosporine or sirolimus.65, 66 In contrast, the benefits of mycophenolate mofetil in short and mid-term results67 have not been consistently confirmed.68, 69 Regardless, the choice of immunosuppression must be balanced against the toxicities inherent to each medication. Tacrolimus is associated with a greater prevalence of post-transplant diabetes and neurotoxicity,65 whereas mTOR inhibitors produce a lower degree of renal toxicity, lower prevalence of viral infections, and lower prevalence of cancer. Studies have shown that modifications to immunosuppression regimens over the course of patient follow-up must be accompanied by monitoring of the immune response through measuring anti-HLA antibodies.15

Arterial hypertension affects more than 75% of all recipients, and is correlated with renal function and treatment with a variety of immunosuppressants.70 Treatment with calcium channel blockers counteracts the vasoconstriction induced by calcineurin inhibitors and is associated with improved renal function and graft survival.71 In contrast, the use of renin-angiotensin-aldosterone system inhibitors, which can reduce graft fibrosis and proteinuria and improve graft survival, has provided contradictory results.72-74 Although their use has been associated with reduced glomerular filtration rates and more severe anaemia,75 the GEENCT analysis suggests that their use could also be associated with reduced graft loss when started early.76 Finally, whatever treatment regimen is necessary, including diuretics, must be administered to maintain blood pressure below 130/80 mmHg, keeping in mind that many patients have an abnormal circadian blood pressure pattern (non-dipper or reverse dipper), which has also been related to graft survival.77 No clinical trials have evaluated whether chronotherapy can modify patient or graft survival.

Pre- and post-transplant diabetes have been associated with worse outcomes, with higher rates of mortality and graft failure.78 As such, proper control of glycaemia through diet, lifestyle habits, and the use of oral anti-diabetics and/or insulin can increase graft survival. Studies have suggested that the changes produced by diabetic nephropathy appear earlier in transplanted kidneys than in native kidneys.79

Dyslipidaemia affects more than 50% of kidney recipients, and its treatment can reduce the rate of cardiovascular events. However, although one clinical trial demonstrated a slower progression of graft vasculopathies after 6 months,80 the use of statins does not seem to modify graft survival based on results from clinical trials and registry analyses, including the data from the GEENCT.81,82

Anaemia is a common complication in patients receiving kidney transplants (35%-40%) and has a substantial impact on patient and graft survival. Treatment with erythropoiesis-stimulating agents in patients with chronic allograft dysfunction reduces the requirement for transfusions and improves patient quality of life.83 The target haemoglobin level for kidney transplant recipients with chronic dysfunction has yet to be established. Although it can be assumed to be the same as in individuals without transplants (10-12 g/dl), a recent clinical trial suggests that normalised haemoglobin levels (13-15 g/dl) are safe and are associated with slower progression of renal failure after 2 years (a decline of 2.4 ml/min/1.73 m2 in the normalisation group vs 5.9 ml/min/1.73 m2 in the partial correction group).84

4. PREDICTING GRAFT SURVIVAL

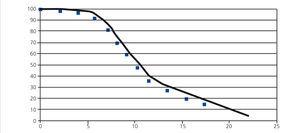

Chronic allograft dysfunction is characterised by a progressive deterioration in glomerular filtration rate (GFR) over time, which can be modelled in different ways. The exponential model has been widely used to estimate graft half-life, defined as the time elapsed until 50% of grafts have failed. Unfortunately, in the context of TX, this type of model tends to overestimate half-life for cohorts of patients with a short follow-up period.3, 4, 15 Using the GEENCT data, Seron et al.15 evaluated the goodness of fit of 5 models (exponential, Weibull, gamma, log-normal, and log-linear) for estimating graft half-life. They concluded that the exponential model overestimates graft half-life (an increase from 14 years to 52 years between 1990 and 2002), whereas the other models had a similar goodness of fit and confirmed a non-significant increase in graft half-life of between 3 and 4 years. Figure 3 displays an example of the log-linear distribution that provides the best fit.
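The tendency of the exponential model to overestimate half-life after a short follow-up can be illustrated with a minimal Python sketch. It assumes survival functions of the form S(t) = exp(-λt) for the exponential model and S(t) = exp(-(t/scale)^shape) for the Weibull model. The cohort figures (96% graft survival after 3 years) and the Weibull shape of 1.5 are hypothetical values chosen only to show the effect; they are not taken from the GEENCT data.

```python
import math


def exponential_half_life(hazard_per_year):
    """Time at which S(t) = exp(-hazard * t) falls to 0.5."""
    return math.log(2) / hazard_per_year


def weibull_half_life(scale_years, shape):
    """Time at which S(t) = exp(-(t / scale) ** shape) falls to 0.5."""
    return scale_years * math.log(2) ** (1 / shape)


# Hypothetical cohort: 96% of grafts still functioning after 3 years.
follow_up_years = 3.0
survival = 0.96
cumulative_hazard = -math.log(survival)

# Exponential model: constant hazard extrapolated indefinitely.
hazard = cumulative_hazard / follow_up_years
exp_half_life = exponential_half_life(hazard)

# Weibull model through the same point, with an assumed shape > 1,
# meaning that the hazard of graft loss rises with time.
shape = 1.5
scale = follow_up_years / cumulative_hazard ** (1 / shape)
wb_half_life = weibull_half_life(scale, shape)

print(f"Exponential half-life: {exp_half_life:.1f} years")
print(f"Weibull half-life:     {wb_half_life:.1f} years")
```

Both curves pass through the same observed survival at 3 years, yet the exponential model projects a half-life of roughly 51 years and the Weibull model with a rising hazard projects roughly 20 years. The difference comes entirely from the assumption made about what happens beyond the observed follow-up.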

In order to monitor loss in renal function, we can also measure the decrease in GFR over time. Using data from an American registry, Srinivas et al.85 demonstrated that the evolution of renal function during the first 2 years post-transplant changed between 2003 and 2008. During this period, GFR at 6 months increased from 53.1 ml/min to 56.5 ml/min, the change in GFR between 6 and 12 months went from -1.18 ml/min to +0.09 ml/min, and the mean change from 12 to 24 months went from -4.29 ml/min to 0 ml/min. With this type of approach, we can also model the progression of CKD, whether using linear models86 or more complex models that take into account the fact that progression accelerates at more advanced stages of CKD. In this context, Khalkhali87 developed a model using a cohort of 214 patients with progressive CKD from the 1534 patients monitored during the period of 1997-2005. In this study, patients spent less time at each stage as CKD progressed (stage 1: 26.4 months; stage 2: 24.7 months; stage 3: 22.0 months; and stage 4: 18.5 months).
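A linear model of GFR decline can be sketched as an ordinary least-squares fit of GFR against time. The following Python example is a minimal illustration under that assumption; the function names and the patient measurements are hypothetical and are not taken from any of the cited studies.

```python
def fit_gfr_line(months, gfr_values):
    """Ordinary least-squares fit of GFR (ml/min) against time (months).

    Returns (slope, intercept); a negative slope means declining function.
    """
    n = len(months)
    mean_t = sum(months) / n
    mean_g = sum(gfr_values) / n
    sxx = sum((t - mean_t) ** 2 for t in months)
    sxy = sum((t - mean_t) * (g - mean_g) for t, g in zip(months, gfr_values))
    slope = sxy / sxx
    intercept = mean_g - slope * mean_t
    return slope, intercept


def months_until_gfr(threshold, slope, intercept):
    """Post-transplant month at which the fitted line reaches the threshold.

    Returns None when GFR is stable or improving (slope >= 0).
    """
    if slope >= 0:
        return None
    return (threshold - intercept) / slope


# Hypothetical follow-up of one recipient (months post-transplant, ml/min).
months = [6, 12, 24, 36, 48]
gfr_values = [56.5, 55.0, 52.0, 49.0, 46.0]

slope, intercept = fit_gfr_line(months, gfr_values)
month_at_15 = months_until_gfr(15.0, slope, intercept)
print(f"Slope: {slope * 12:.1f} ml/min per year")
print(f"Projected month with GFR of 15 ml/min: {month_at_15:.0f}")
```

For these made-up values the fitted slope is -0.25 ml/min per month (-3 ml/min per year), and the line reaches 15 ml/min at month 172. A constant slope is the simplest possible assumption; as noted above, models in which progression accelerates at advanced stages would project an earlier failure.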

Other studies have analysed the risk factors associated with this progression or failure. In some models, only pre-transplant variables are taken into account,68, 86-91 whereas others also include post-transplant variables (Table 3).92 Several studies have compared the goodness of fit of various scores. The donor risk score defined by Schold et al.90 provides one of the best fits with the development of stage 4 CKD one year after transplantation.93 In other studies, histological variables have been included in addition to clinical variables. Anglicheau et al.40 demonstrated the value of a score composed of the percentage of sclerosed glomeruli in donor biopsies, donor hypertension, and serum creatinine >1.5 mg/dl before organ extraction for predicting a GFR <25 ml/min (area under the receiver operating characteristic [ROC] curve: 0.84).

Finally, although clinical data (acute rejection, creatinine, and proteinuria) and histological data from surveillance biopsies have been associated with long-term graft survival, the long-term predictive capacity of these data is insufficient.26,94,95 For example, although the relative risk of graft failure increases by a factor of 2.2 for every mg/dl of serum creatinine at one year, its predictive value for graft failure at 7 years, expressed as the area under the ROC curve, is only 0.62. The same occurs with CKD progression: measured as the inverse of creatinine, this parameter produces an area under the curve of 0.55. In fact, it has been proposed that neither acute rejection nor serum creatinine levels are appropriate measures of long-term graft survival.96
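The area under the ROC curve quoted above can be read as the probability that a randomly chosen graft that later failed had a higher marker value than a randomly chosen graft that survived, with 0.5 meaning no discrimination. The following Python sketch computes it by direct pairwise comparison; the function name and the creatinine values are hypothetical and serve only to show the calculation.

```python
def roc_auc(values_failed, values_surviving):
    """Area under the ROC curve by pairwise comparison.

    Counts the fraction of (failed, surviving) pairs in which the failed
    graft has the higher marker value; ties count as half a pair.
    """
    favourable = 0.0
    for failed in values_failed:
        for surviving in values_surviving:
            if failed > surviving:
                favourable += 1.0
            elif failed == surviving:
                favourable += 0.5
    return favourable / (len(values_failed) * len(values_surviving))


# Hypothetical serum creatinine (mg/dl) at one year post-transplant.
creatinine_failed = [1.9, 1.4, 2.3, 1.2]
creatinine_surviving = [1.3, 1.1, 1.6, 1.0, 1.5]

auc = roc_auc(creatinine_failed, creatinine_surviving)
print(f"AUC: {auc:.2f}")
```

In this made-up sample, 15 of the 20 pairs are ordered correctly, giving an area of 0.75. A marker can therefore carry a large relative risk and still separate individual patients poorly when the two distributions overlap, which is the situation described for creatinine at one year.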

KEY CONCEPTS

1. Kidney allograft failure is a major problem in the treatment of chronic kidney disease, since it is one of the more common causes of return to dialysis programmes and to the wait list.

2. The introduction of new immunosuppressant drugs has reduced acute rejection rates and improved long-term graft survival. At the same time, the combination of these drugs has contributed to reducing the rate of late graft failure.

3. An understanding of the causes and risk factors for allograft loss could facilitate early intervention on both immunological and non-immunological risk factors in order to prevent this graft failure.

4. Donor-related factors have a major influence on kidney graft survival. Algorithms designed to allocate organs to the appropriate recipient in order to optimise results are complex and difficult to implement in daily practice.

5. Continuous changes to the demographics and comorbidities of donors and recipients oblige us to continuously monitor changes in transplant results. At present, we do not have sufficiently precise models for determining long-term graft survival.

Acknowledgements

This study was financed in part by the Spanish Ministry of Science and Innovation (Carlos III Health Institute, FIS PI10/0185 and PI10/01020), REDINREN RD12/0021/0015, the I Novartis-SET fund, and the Andalucía Health Department (PI-0499/2009).

Conflicts of interest

The authors declare that they have no conflicts of interest related to the contents of this article.

Table 1. Causes of kidney graft failure in 2 case series

Table 2. Risk factors associated with graft failure

Table 3. Risk scores for graft loss

Figure 1. Different patterns of evolution of kidney function in kidney transplants with chronic allograft dysfunction

Figure 2. Algorithm for calculating risk scores among donors and recipients

Figure 3. Log-normal survival function